Google’s AI-Powered Smart Glasses: A Deep Dive into the Future of Wearable Tech

During the “Humanity Reimagined” segment at TED2025 in Vancouver, Shahram Izadi, Google’s Head of Android XR, wore a smart glasses prototype and gave a first look at its AI-driven features. Unlike earlier smartphone-tethered efforts, these glasses aim for standalone operation, using on-device processing for AI tasks to reduce reliance on external devices. Designed to resemble everyday eyewear, they integrate a high-resolution micro-display, a front-facing camera, microphones, and bone-conduction speakers while staying comfortable and discreet.

Key Features

1. Real-Time Language Translation

Equipped with Gemini AI, the glasses translate spoken languages on the fly, turning live conversations in Farsi, Hindi, or any of over 40 supported languages into clear subtitles within the user’s view.

2. AI-Powered Memory Recall

The standout “Memory” feature continuously indexes visual inputs, enabling users to ask questions like “Where did I leave my keys?” and receive precise location cues based on the device’s stored video timeline.
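
To make the idea of a searchable video timeline more concrete, here is a minimal sketch in Python. It is not Google’s implementation, which has not been published. It assumes that some vision model (not shown) has already turned camera frames into labeled sightings, and the names `Sighting`, `MemoryTimeline`, `record`, and `last_seen` are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sighting:
    """One labeled observation (hypothetical structure, for illustration)."""
    timestamp: float  # seconds since recording started
    label: str        # object label from an assumed vision model
    place: str        # coarse description of where it was seen


class MemoryTimeline:
    """Toy rolling index of sightings; assumes timestamps never decrease."""

    def __init__(self, window_seconds: float = 600.0):
        self.window_seconds = window_seconds
        self._sightings: list[Sighting] = []

    def record(self, sighting: Sighting) -> None:
        # Append the new sighting, then drop anything older than the window.
        self._sightings.append(sighting)
        cutoff = sighting.timestamp - self.window_seconds
        self._sightings = [s for s in self._sightings if s.timestamp >= cutoff]

    def last_seen(self, label: str) -> Optional[Sighting]:
        # Walk the timeline backwards so the most recent match wins.
        for s in reversed(self._sightings):
            if s.label == label:
                return s
        return None
```

In this sketch, a question like “Where did I leave my keys?” would reduce to `timeline.last_seen("keys")`, which returns the most recent sighting still inside the rolling window, or `None` if the keys have not been seen recently enough.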

3. Immersive Navigation Overlays

Integrated with Google Maps, the glasses project turn-by-turn directions directly onto the lenses, combining 2D instruction overlays and a compact 3D minimap to guide users hands-free.

4. Contextual Information Retrieval

Users can point at an object, such as a museum exhibit or a plant in the wild, and receive on-the-spot contextual insights and explanations, powered by multimodal AI processing.

Design & Technical Specifications

Google’s smart glasses weigh approximately 200 grams, a weight intended to allow all-day wear without strain. They run on the Android XR platform, a tailored branch of Android for AR/VR devices, and use Qualcomm processors optimized for AI inference. The micro-display uses optical waveguides for crisp visuals even in bright conditions.

Use Cases & Benefits

- Travel & Tourism: Live translation and navigation transform sightseeing and border-crossing experiences.

- Enterprise & Field Work: Hands-free data recall and navigation boost efficiency in warehouses, factories, and logistics.

- Accessibility: Real-time captions and visual prompts aid users with hearing or memory impairments.

- Education & Training: AR overlays turn complex instructions into intuitive, in-field tutorials.

Competition & Future Outlook

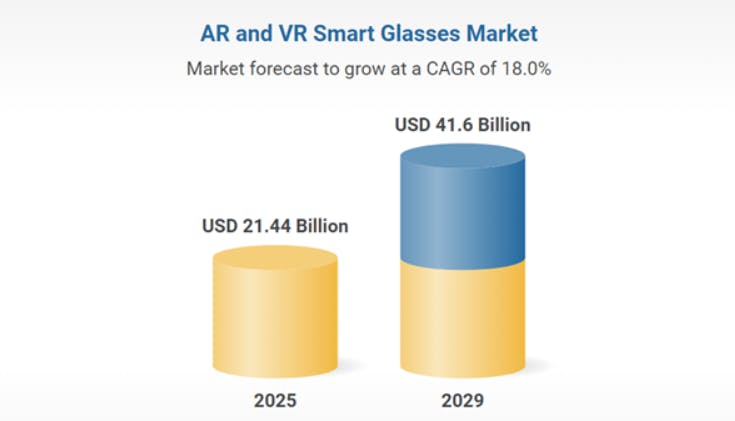

With Apple refining Vision Pro, Meta expanding Ray-Ban glasses, and Samsung backing Project HAEAN, Google’s 2026 collaboration with Samsung positions it at the heart of a burgeoning AR/AI arms race. Each player’s unique combination of hardware design, AI integration, and ecosystem strength will shape the wearable landscape.

Conclusion

Google’s AI-powered smart glasses mark a watershed moment in wearable tech, merging AR and AI to deliver translation, memory recall, navigation, and contextual insights, all without a smartphone. At Rysysth Technologies, we’re thrilled to see this innovation paving the way for more intuitive, hands-free computing. If they launch in 2026 as planned, these glasses could redefine productivity, accessibility, and user interaction in daily life.